Lately, I’ve been reminded of a quote that’s often attributed to Charles Darwin: “… It is not the most intellectual of the species that survives; it is not the strongest that survives, but the species that survives is the one that is able best to adapt and adjust to the changing environment in which it finds itself.”

The idea behind this quote remains true and applies well beyond the field of evolutionary biology.

Convergence (version 2.0) is here, and, to survive, we need to adjust, change and adapt to our changing environment. We cannot build networks for today (and for the future) like we have built them in the past, lest we go the way of the dodo bird.

Let’s look at the changes and improvements made since the first converged network (Convergence 1.0).

We established networks in the voice world that operated on high-availability systems we could count on without question. When you picked up the handset, you had a dial tone. With the rapid growth of data networks starting in the ’70s, it was inevitable that the industry would find synergies to allow voice and data telecommunications to exist on the same converged network.

In hindsight, I would argue that the technological and engineering issues were actually the easiest to overcome. The most difficult were the people issues: resistance to or fear of change, ego, protectionism, organizational boundaries, and risk avoidance, to name just a few. As the technologies grew, evolved and improved, so did our understanding. This helped us break down and overcome the people issues. A converged network bringing voice and data together is now the norm.

I’ve had a number of recent discussions with user groups about the Internet of Things (IoT): the opportunity that new technologies, applications and devices bring to an organization, and the challenges that can arise in adapting to this changing environment.

Traditionally, machine-to-machine (M2M) or device-based networks sat outside our converged networks, whether they be for digital building technologies, like video and security; smart cars; industrial networks; or many others.

In an IoT world, those networks still exist, as they always have. They may work on the same physical and/or logical networks with the same cables, boxes, and software, or they may use “like” networks to better interact.

The IoT world is here, and convergence is increasing in both volume and velocity. IoT is a nebulous concept – hence all the cloud analogies. It will continue to morph as technologies evolve along with those who use it. Your corporate IoT cloud will look different from mine, and that’s okay.

Will we ever get to a true hyper-converged network where anything can talk to anything at any time? I don’t know – but that’s a people issue, not an engineering one. My lack of understanding or foresight doesn’t mean I don’t need to adjust and prepare for that eventuality. Converged networks will keep growing as they have; I will grow and adapt, or else I risk being unable to function in my changing environment.

Which brings me to adapting and adjusting to a changing environment from a network infrastructure frame of mind. Our TIA TR-42 (Telecommunications Cabling Systems ANSI/TIA-568 family), BICSI (TDMM and others) and proprietary or third-party documents must adapt and adjust. Whether they be specifications, standards or best-practice resources, they must evolve or face irrelevance (extinction, to extend the metaphor).

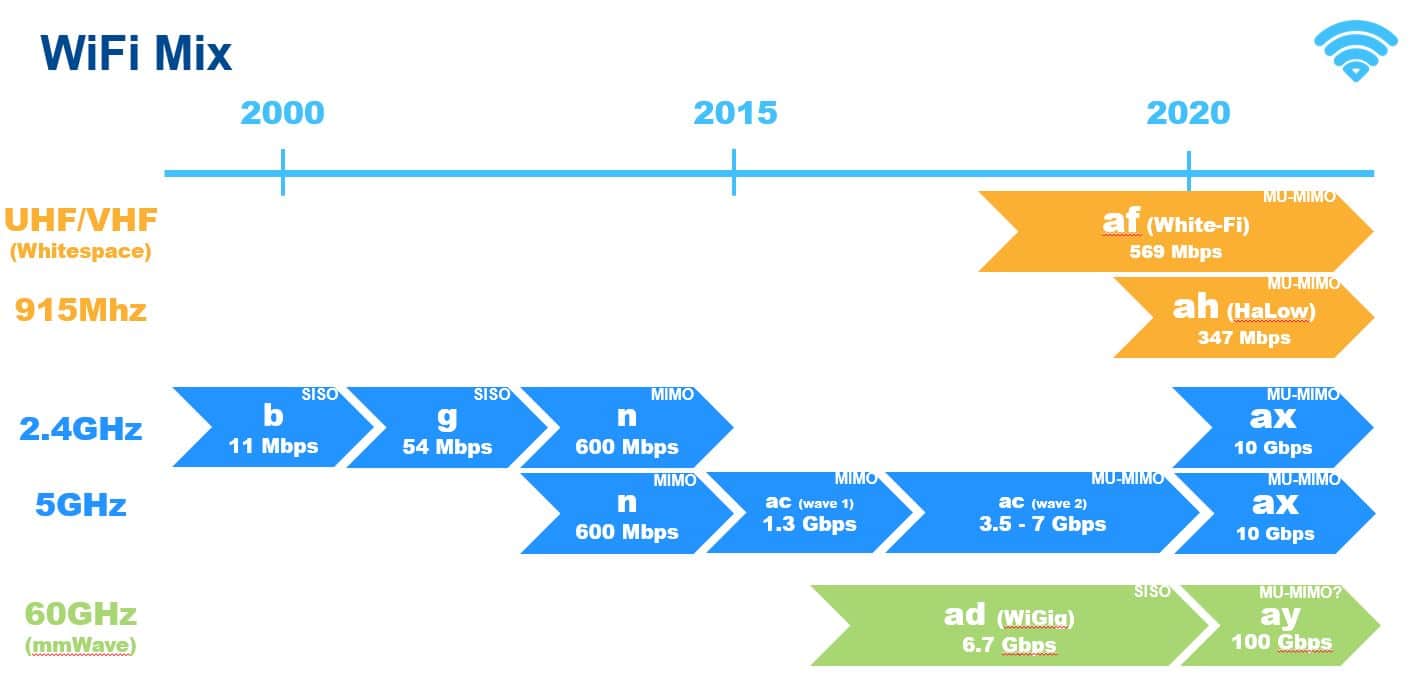

Our converged networks have evolved with higher speeds, higher power and more portability or mobility than ever before, and more than any pundit I remember was predicting in the ’90s, when people were amazed at shared megabit network capabilities and the ability to talk on the phone untethered. But simply creating faster networks, with higher grades of cabling, is not the answer.

Improvements in speed, noise immunity, power, portability, and mobility are all important, but they alone won’t get us where we need to go. We need to think differently, challenge the status quo and create new solutions. We need to adjust and adapt.

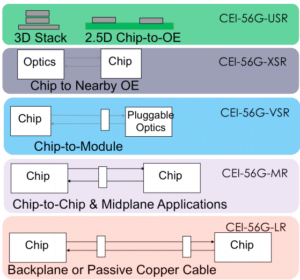

Traditional network guidance has centered on human telecommunications, whether directly, through things like voice and video, or indirectly, through human-controlled devices like our computers and tablets. Devices have been communicating through artificial means at least as long as we have, whether through mechanical wires, pneumatics, hydraulics, electronic signals or other means. But now those machines are joining us in the digital world; rather than relying on proprietary protocols, they can run on the same networks that our human-controlled devices do.

The bias toward human-controlled telecommunications is natural given the nature of standards development. Almost every standard defines “user” as a primary consideration when designing networks. Devices, despite having the ability to communicate on the same networks, have noticeably different requirements and, therefore, need different considerations. A one-size-fits-all approach to network design has arguably never worked well; it certainly won’t for our digital buildings and IoT environment of the future.

In the smart building example, a “user” is a transient device on the network. The user goes home at the end of the day and on holidays, and user groups or customers change over with leases and occupancy changes. The lights, door controls, surveillance, security, mechanical and other digital building systems are effectively permanent fixtures. Our laptops, phones, and tablets are typically refreshed every few years. A building’s systems and technologies are expected to last much longer than that.

Furthermore, the operational risks, concerns, needs, and security requirements differ between “users” and “devices.” A person can get sick or take a vacation; a building cannot. The lights must always turn on, the HVAC systems must always work, the doors must always open, close and secure – without question. Even though a door control, lighting or HVAC system may not require the same bandwidth as a user, that does not mean its network has lesser requirements. If anything, it may have higher requirements in some areas. If my laptop doesn’t function, I can still connect with my tablet or my phone. If a building doesn’t function, it impacts all the users – not just one.

I know that industry standards and best practices are adapting and adjusting to a new environment. Make sure your practices, specifications, assumptions, and procedures do as well. Otherwise, we risk new technology becoming an impediment to our goals – not through any fault of its own, but rather through how it was implemented. Make sure your team members, both external and internal, remember the lessons of Convergence 1.0 so they can be ready for 2.0, which is happening now. “We’ve always done it this way” might as well have been the mantra of the dodo bird.